HDDM is a Python toolbox for hierarchical Bayesian parameter estimation of the Drift Diffusion Model (and now many other models). Drift Diffusion Models (and related sequential sampling models) are widely used in psychology and cognitive neuroscience to study decision making.

Because Docker uses a lot of RAM, I don't recommend starting it automatically when you log in. Instead, start Docker manually when you want to use it. To disable starting Docker at login, right-click the Docker icon in the taskbar, click Settings, and on the General tab uncheck "Start Docker when you log in".


Description

Docker Hub mirror cache

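Usage

Note

Limited network bandwidth to the upstream sites and similar constraints have left the mirror caches for Docker Hub, Google Container Registry (gcr.io), and Quay Container Registry (quay.io) essentially unusable. Therefore, since April 2020, requests to the Docker Hub mirror cache from outside the USTC campus network are redirected (HTTP 302) to other Docker Hub mirrors in China. Since 16 August 2020, requests from outside the USTC campus network to the Google Container Registry mirror cache are redirected (302) to the public mirror service provided by Alibaba Cloud, which includes some of the container images available on gcr.io. Requests from outside the campus network to the Quay Container Registry mirror cache are redirected (302) to the upstream site.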

The end of this document describes how to set up your own local mirror cache, for reference.

Update 2020/08/21: Redirecting gcr requests to Alibaba Cloud's public mirror service may pose security risks (see mirrorhelp#158). Off-campus requests to the gcr mirror now return 403.

Note

Starting in November 2020, Docker Hub will add access rate limits, which may cause intermittent problems when using the Docker Hub mirror cache on campus.

Linux

On systems that use upstart (Ubuntu 14.04, Debian 7 Wheezy), set the Hub address in DOCKER_OPTS in the configuration file /etc/default/docker:

Restart the service:
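For upstart-based systems, the DOCKER_OPTS edit and the restart can be sketched in Python as follows. This is a minimal sketch. It assumes the mirror address https://docker.mirrors.ustc.edu.cn/ given in the macOS and Windows instructions and dockerd's --registry-mirror option. The helper set_registry_mirror_opt is hypothetical, and it replaces any existing DOCKER_OPTS line, so you must merge any other options you rely on back in by hand.

```python
MIRROR = "https://docker.mirrors.ustc.edu.cn/"
# Restart command for upstart-based systems (Ubuntu 14.04, Debian 7).
RESTART_CMD = ["sudo", "service", "docker", "restart"]


def set_registry_mirror_opt(defaults_text: str, mirror: str = MIRROR) -> str:
    """Return /etc/default/docker contents with DOCKER_OPTS pointing at `mirror`.

    Hypothetical helper: the first uncommented DOCKER_OPTS line is replaced
    (dropping any other options it held); if there is none, one is appended.
    """
    new_line = f'DOCKER_OPTS="--registry-mirror={mirror}"'
    lines = defaults_text.splitlines()
    for i, line in enumerate(lines):
        if line.strip().startswith("DOCKER_OPTS="):
            lines[i] = new_line
            break
    else:
        lines.append(new_line)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(set_registry_mirror_opt('#DOCKER_OPTS="--dns 8.8.8.8"\n'), end="")
```

As root, write the returned text back to /etc/default/docker. Then run RESTART_CMD, for example with subprocess.run(RESTART_CMD, check=True).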

On systems that use systemd (Ubuntu 16.04+, Debian 8+, CentOS 7), add the following to the configuration file /etc/docker/daemon.json:

Restart dockerd:
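For systemd-based systems, the daemon.json change and restart can be sketched as below. This is a minimal sketch that assumes the same USTC mirror address. The helper add_registry_mirror is hypothetical. It merges the 'registry-mirrors' key into the existing configuration instead of overwriting the file, because daemon.json often holds other settings.

```python
import json

MIRROR = "https://docker.mirrors.ustc.edu.cn/"
# Restart command for systemd-based systems.
RESTART_CMD = ["sudo", "systemctl", "restart", "docker"]


def add_registry_mirror(daemon_json: str, mirror: str = MIRROR) -> str:
    """Return daemon.json text with `mirror` listed under "registry-mirrors".

    Other keys already present are preserved, and the mirror is not added
    twice if it is already configured. An empty file is treated as {}.
    """
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    mirrors = config.setdefault("registry-mirrors", [])
    if mirror not in mirrors:
        mirrors.append(mirror)
    return json.dumps(config, indent=2) + "\n"


if __name__ == "__main__":
    print(add_registry_mirror('{"debug": true}'), end="")
```

As root, write the result to /etc/docker/daemon.json and then run RESTART_CMD. The same 'registry-mirrors' key is what goes into the Docker Engine JSON settings on newer Docker Desktop versions.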

macOS

Older versions:

1. Open “Docker.app”.

2. Open the Preferences page (shortcut ⌘,) and go to the “Daemon” tab.

3. Under “Registry mirrors”, add https://docker.mirrors.ustc.edu.cn/

4. Click the “Apply & Restart” button at the bottom.

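Newer versions:

1. Open “Docker.app”.

2. Open the Preferences page (shortcut ⌘,) and go to the “Docker Engine” tab.

3. Following the Linux configuration for systemd-based systems, add a 'registry-mirrors' entry to the JSON configuration.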

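Windows

Older versions:

Right-click the Docker icon in the system tray (bottom-right corner) and choose Settings. In the settings window, select Daemon in the left navigation menu. Enter https://docker.mirrors.ustc.edu.cn/ in the Registry mirrors field, then click Apply. Docker restarts and applies the configured mirror.

Newer versions:

Right-click the Docker icon in the system tray and choose Settings. In the settings window, select Docker Engine in the left navigation menu. Following the Linux configuration for systemd-based systems, add a 'registry-mirrors' entry to the JSON configuration, then click “Apply & Restart” to save the settings and restart Docker.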

Check whether the Docker Hub mirror is in effect

Run docker info on the command line. If the output contains the following, the configuration was successful:
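You can also script this check. The sketch below assumes the human-readable docker info layout, where mirrors appear on indented lines under a “Registry Mirrors:” heading. The function name registry_mirrors is hypothetical.

```python
from typing import List


def registry_mirrors(docker_info_output: str) -> List[str]:
    """Return the entries listed under "Registry Mirrors:" in `docker info` output."""
    lines = docker_info_output.splitlines()
    for i, line in enumerate(lines):
        if line.strip() == "Registry Mirrors:":
            header_indent = len(line) - len(line.lstrip())
            mirrors = []
            # Mirror entries are the following lines indented deeper than the heading.
            for entry in lines[i + 1:]:
                indent = len(entry) - len(entry.lstrip())
                if not entry.strip() or indent <= header_indent:
                    break
                mirrors.append(entry.strip())
            return mirrors
    return []  # no "Registry Mirrors:" section, so no mirror is configured
```

Pass it the captured output of docker info, for example subprocess.run(["docker", "info"], capture_output=True, text=True, check=True).stdout. The USTC address should appear in the returned list.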

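How do I set up a local mirror cache?

The mirror site currently does not provide container image caching to users outside the campus network. If you need a mirror cache, you can set up your own local one as follows: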

Redis container:

Mirror cache container:
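Both containers can be started with docker run. The sketch below builds the argument lists in Python. The container names redis and dockerhub, the official redis and registry:2 images, and port 5000 are assumptions. The path /srv/docker/dockerhub is the configuration directory named in this document. It is mounted at /etc/docker/registry, which is where the registry:2 image reads config.yml.

```python
import shlex

# Hypothetical container names; the official redis and registry:2 images are assumed.
REDIS_CMD = [
    "docker", "run", "-d", "--name", "redis", "--restart", "always", "redis",
]
MIRROR_CMD = [
    "docker", "run", "-d", "--name", "dockerhub", "--restart", "always",
    "-p", "5000:5000",                                  # expose the registry port
    "--link", "redis:redis",                            # reach Redis as host "redis"
    "-v", "/srv/docker/dockerhub:/etc/docker/registry",  # config.yml lives here
    "registry:2",
]

if __name__ == "__main__":
    for cmd in (REDIS_CMD, MIRROR_CMD):
        print(shlex.join(cmd))
```

--link is a legacy Docker feature. A user-defined network (docker network create) is the more current way to let the registry reach Redis by name.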

Reference contents of /srv/docker/dockerhub/config.yml:
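A minimal config.yml for a pull-through cache of Docker Hub might look like the text this sketch produces. It assumes the Docker Registry (distribution) configuration keys proxy.remoteurl, storage.cache.blobdescriptor and redis.addr, plus a Redis container reachable as redis:6379. The helper pull_through_cache_config is hypothetical.

```python
def pull_through_cache_config(
    remote_url: str = "https://registry-1.docker.io",
    redis_addr: str = "redis:6379",
    listen_addr: str = ":5000",
) -> str:
    """Render a minimal config.yml for a Docker Registry pull-through cache."""
    return f"""\
version: 0.1
storage:
  cache:
    blobdescriptor: redis
  filesystem:
    rootdirectory: /var/lib/registry
http:
  addr: {listen_addr}
redis:
  addr: {redis_addr}
proxy:
  remoteurl: {remote_url}
"""


if __name__ == "__main__":
    print(pull_through_cache_config(), end="")
```

Clients then use the host and port where this registry listens as their registry-mirrors entry.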

Related links

- Docker homepage

- Docker Hub

Learn how to use a custom Docker base image when deploying trained models with Azure Machine Learning.

Azure Machine Learning will use a default base Docker image if none is specified. You can find the specific Docker image used with azureml.core.runconfig.DEFAULT_CPU_IMAGE. You can also use Azure Machine Learning environments to select a specific base image, or use a custom one that you provide.

A base image is used as the starting point when an image is created for a deployment. It provides the underlying operating system and components. The deployment process then adds additional components, such as your model, conda environment, and other assets, to the image.

Typically, you create a custom base image when you want to use Docker to manage your dependencies, maintain tighter control over component versions or save time during deployment. You might also want to install software required by your model, where the installation process takes a long time. Installing the software when creating the base image means that you don't have to install it for each deployment.

Important

When you deploy a model, you cannot override core components such as the web server or IoT Edge components. These components provide a known working environment that is tested and supported by Microsoft.

Warning

Microsoft may not be able to help troubleshoot problems caused by a custom image. If you encounter problems, you may be asked to use the default image or one of the images Microsoft provides to see if the problem is specific to your image.


This document is broken into two sections:

- Create a custom base image: Provides information to admins and DevOps on creating a custom image and configuring authentication to an Azure Container Registry using the Azure CLI and Machine Learning CLI.

- Deploy a model using a custom base image: Provides information to Data Scientists and DevOps / ML Engineers on using custom images when deploying a trained model from the Python SDK or ML CLI.

Prerequisites

- An Azure Machine Learning workspace. For more information, see the Create a workspace article.

- The Azure Machine Learning SDK.

- The Azure CLI.

- The CLI extension for Azure Machine Learning.

- An Azure Container Registry or other Docker registry that is accessible on the internet.

- The steps in this document assume that you are familiar with creating and using an inference configuration object as part of model deployment. For more information, see Where to deploy and how.

Create a custom base image

The information in this section assumes that you are using an Azure Container Registry to store Docker images. Use the following checklist when planning to create custom images for Azure Machine Learning:

Will you use the Azure Container Registry created for the Azure Machine Learning workspace, or a standalone Azure Container Registry?

When using images stored in the container registry for the workspace, you do not need to authenticate to the registry. Authentication is handled by the workspace.

Warning

The Azure Container Registry for your workspace is created the first time you train or deploy a model using the workspace. If you've created a new workspace, but not trained or created a model, no Azure Container Registry will exist for the workspace.

When using images stored in a standalone container registry, you will need to configure a service principal that has at least read access. You then provide the service principal ID (username) and password to anyone that uses images from the registry. The exception is if you make the container registry publicly accessible.

For information on creating a private Azure Container Registry, see Create a private container registry.

For information on using service principals with Azure Container Registry, see Azure Container Registry authentication with service principals.

Azure Container Registry and image information: Provide the image name to anyone that needs to use it. For example, an image named myimage, stored in a registry named myregistry, is referenced as myregistry.azurecr.io/myimage when using the image for model deployment.
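The naming convention can be captured in a small helper. This is a hypothetical sketch: image_reference is not part of any Azure SDK. It also writes out the tag explicitly, defaulting to latest, because Docker applies :latest when no tag is given.

```python
from typing import Optional


def image_reference(registry: str, name: str, tag: Optional[str] = None) -> str:
    """Build a reference such as myregistry.azurecr.io/myimage:v1.

    `registry` is the registry name without the .azurecr.io suffix. When no
    tag is given, the :latest tag Docker would apply is written out explicitly.
    """
    return f"{registry}.azurecr.io/{name}:{tag or 'latest'}"


if __name__ == "__main__":
    print(image_reference("myregistry", "myimage", "v1"))
```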

Image requirements

Azure Machine Learning only supports Docker images that provide the following software:

- Ubuntu 16.04 or greater.

- Conda 4.5.# or greater.

- Python 3.6+.

To use Datasets, please install the libfuse-dev package. Also make sure to install any user space packages you may need.
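The version requirements above can be checked with a short script. This sketch assumes you have already collected the version strings from inside the image, for example from /etc/os-release, conda --version and python --version. The names MINIMUMS and unmet_requirements are hypothetical. Versions are compared numerically, component by component.

```python
from typing import Dict, Tuple

# Minimum versions from the image requirements listed above.
MINIMUMS: Dict[str, str] = {"ubuntu": "16.04", "conda": "4.5", "python": "3.6"}


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '4.5.11' or '16.04' into (4, 5, 11) or (16, 4) for numeric comparison."""
    return tuple(int(part) for part in text.strip().split("."))


def unmet_requirements(found: Dict[str, str]) -> Dict[str, str]:
    """Map each component that is missing or too old to a short explanation."""
    problems: Dict[str, str] = {}
    for component, minimum in MINIMUMS.items():
        version = found.get(component)
        if version is None:
            problems[component] = "missing"
        elif parse_version(version) < parse_version(minimum):
            problems[component] = f"{version} < {minimum}"
    return problems
```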

Azure ML maintains a set of CPU and GPU base images published to Microsoft Container Registry that you can optionally leverage (or reference) instead of creating your own custom image. To see the Dockerfiles for those images, refer to the Azure/AzureML-Containers GitHub repository.

For GPU images, Azure ML currently offers both cuda9 and cuda10 base images. The major dependencies installed in these base images are:

| Dependencies | IntelMPI CPU | OpenMPI CPU | IntelMPI GPU | OpenMPI GPU |
|---|---|---|---|---|
| miniconda | 4.5.11 | 4.5.11 | 4.5.11 | 4.5.11 |
| mpi | intelmpi2018.3.222 | openmpi3.1.2 | intelmpi2018.3.222 | openmpi3.1.2 |
| cuda | - | - | 9.0/10.0 | 9.0/10.0/10.1 |
| cudnn | - | - | 7.4/7.5 | 7.4/7.5 |
| nccl | - | - | 2.4 | 2.4 |
| git | 2.7.4 | 2.7.4 | 2.7.4 | 2.7.4 |

The CPU images are built from ubuntu16.04. The GPU images for cuda9 are built from nvidia/cuda:9.0-cudnn7-devel-ubuntu16.04. The GPU images for cuda10 are built from nvidia/cuda:10.0-cudnn7-devel-ubuntu16.04.

Important

When using custom Docker images, it is recommended that you pin package versions in order to better ensure reproducibility.

Get container registry information

In this section, learn how to get the name of the Azure Container Registry for your Azure Machine Learning workspace.


If you've already trained or deployed models using Azure Machine Learning, a container registry was created for your workspace. To find the name of this container registry, use the following steps:

Open a new shell or command-prompt and use the following command to authenticate to your Azure subscription:

Follow the prompts to authenticate to the subscription.

Tip

After logging in, you see a list of subscriptions associated with your Azure account. The subscription with isDefault: true is the currently activated subscription for Azure CLI commands. This subscription must be the same one that contains your Azure Machine Learning workspace. You can find the subscription ID in the Azure portal on the overview page for your workspace. You can also use the SDK to get the subscription ID from the workspace object, for example, Workspace.from_config().subscription_id.

To select another subscription, use the az account set -s <subscription name or ID> command and specify the subscription name or ID to switch to. For more information about subscription selection, see Use multiple Azure Subscriptions.

Use the following command to list the container registry for the workspace. Replace <myworkspace> with your Azure Machine Learning workspace name. Replace <resourcegroup> with the Azure resource group that contains your workspace:

Tip

If you get an error message stating that the ml extension isn't installed, use the following command to install it:

The information returned is similar to the following text:

The <registry_name> value is the name of the Azure Container Registry for your workspace.
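Both outputs in this procedure can be processed in Python. The sketch below makes two assumptions. First, the subscription list (as printed by az account list) is a JSON array whose entries carry an isDefault field. Second, the containerRegistry value is a standard Azure Resource Manager resource ID ending in .../providers/Microsoft.ContainerRegistry/registries/<registry_name>, possibly wrapped in JSON quotes. The function names are hypothetical.

```python
import json
from typing import Any, Dict, List


def default_subscription(account_list_json: str) -> Dict[str, Any]:
    """Return the subscription entry marked "isDefault": true."""
    accounts: List[Dict[str, Any]] = json.loads(account_list_json)
    for account in accounts:
        if account.get("isDefault"):
            return account
    raise ValueError("no default subscription found")


def registry_name(container_registry_id: str) -> str:
    """Return <registry_name> from an ARM resource ID such as
    /subscriptions/<id>/resourceGroups/<rg>/providers/
    Microsoft.ContainerRegistry/registries/<registry_name>
    """
    # Drop surrounding whitespace and JSON quotes, then take the last path segment.
    return container_registry_id.strip().strip('"').rstrip("/").split("/")[-1]
```

Comparing default_subscription(...)["id"] with Workspace.from_config().subscription_id is one way to confirm that the active subscription is the workspace's subscription.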

Build a custom base image

The steps in this section walk through creating a custom Docker image in your Azure Container Registry. For sample Dockerfiles, see the Azure/AzureML-Containers GitHub repo.

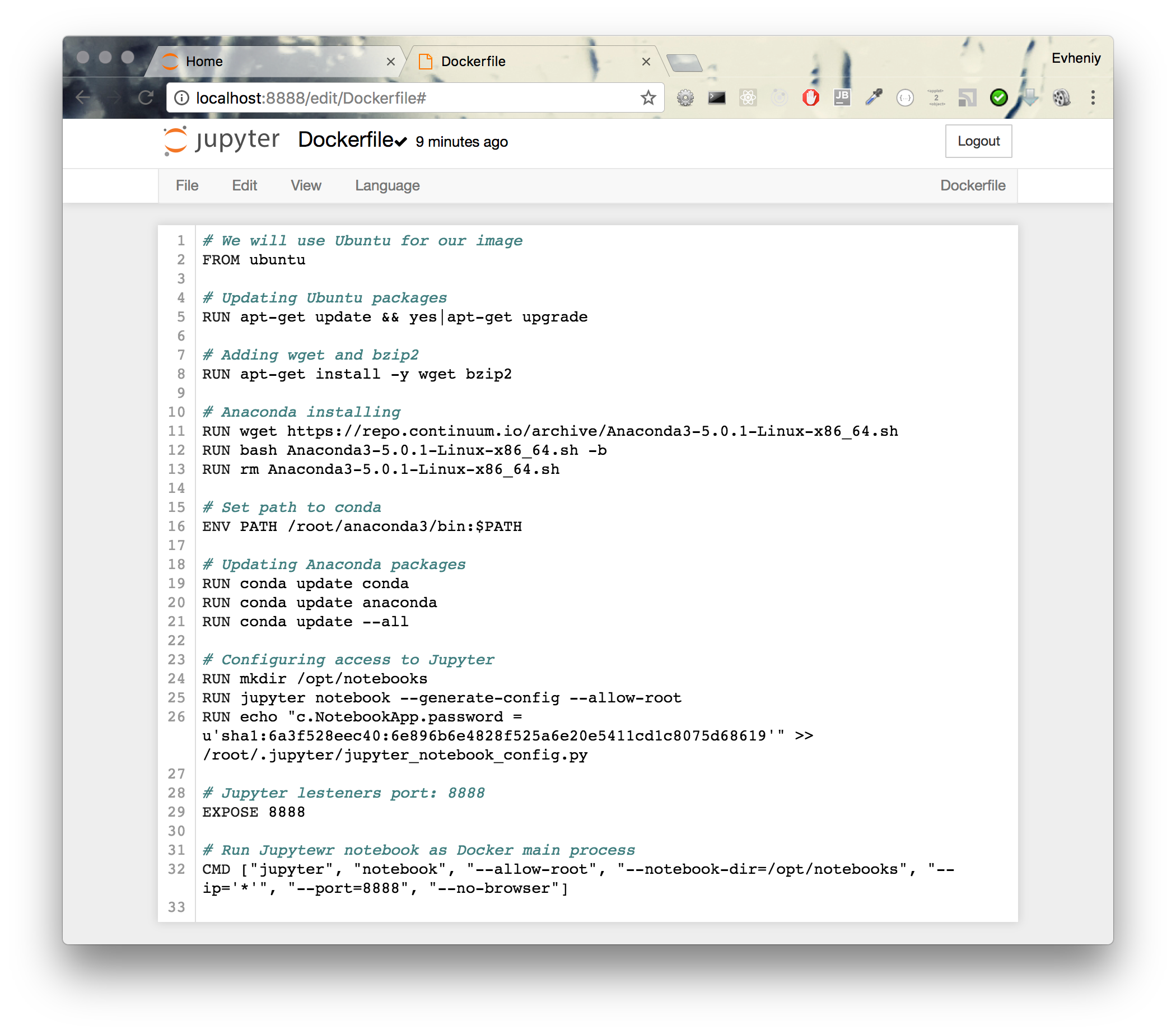

Create a new text file named Dockerfile, and use the following text as the contents:

From a shell or command-prompt, use the following command to authenticate to the Azure Container Registry. Replace <registry_name> with the name of the container registry you want to store the image in:

To upload the Dockerfile and build it, use the following command. Replace <registry_name> with the name of the container registry you want to store the image in:

Tip

In this example, a tag of :v1 is applied to the image. If no tag is provided, a tag of :latest is applied.

During the build process, information is streamed back to the command line. If the build is successful, you receive a message similar to the following text:

For more information on building images with an Azure Container Registry, see Build and run a container image using Azure Container Registry Tasks.

For more information on uploading existing images to an Azure Container Registry, see Push your first image to a private Docker container registry.
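The login and build steps in this section can be sketched as argument lists. The sketch assumes the Azure CLI commands az acr login --name and az acr build --image/--registry/--file, and it uses the document's placeholder names. The helper acr_commands is hypothetical.

```python
import shlex
from typing import List, Optional


def acr_commands(registry_name: str, image: str, tag: Optional[str] = "v1") -> List[List[str]]:
    """Return the az CLI commands that log in to a registry and build an image there.

    The build context is the current directory, which must contain the Dockerfile.
    With tag=None the image is tagged :latest, which Docker would apply anyway.
    """
    image_ref = f"{image}:{tag or 'latest'}"
    return [
        ["az", "acr", "login", "--name", registry_name],
        ["az", "acr", "build", "--image", image_ref,
         "--registry", registry_name, "--file", "Dockerfile", "."],
    ]


if __name__ == "__main__":
    for cmd in acr_commands("myregistry", "myimage"):
        print(shlex.join(cmd))
```

Run each command from the directory that contains the Dockerfile, for example with subprocess.run(cmd, check=True).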

Use a custom base image

To use a custom image, you need the following information:

- The image name. For example, mcr.microsoft.com/azureml/o16n-sample-user-base/ubuntu-miniconda:latest is the path to a simple Docker image provided by Microsoft.

Important

For custom images that you've created, be sure to include any tags that were used with the image, for example, :v1. If you did not use a specific tag when creating the image, a tag of :latest was applied.

If the image is in a private repository, you also need the following information:

- The registry address. For example, myregistry.azurecr.io.

- A service principal username and password that has read access to the registry.

If you do not have this information, speak to the administrator for the Azure Container Registry that contains your image.

Publicly available base images

Microsoft provides several Docker images in a publicly accessible repository, which can be used with the steps in this section:

| Image | Description |
|---|---|
| mcr.microsoft.com/azureml/o16n-sample-user-base/ubuntu-miniconda | Core image for Azure Machine Learning |
| mcr.microsoft.com/azureml/onnxruntime:latest | Contains ONNX Runtime for CPU inferencing |
| mcr.microsoft.com/azureml/onnxruntime:latest-cuda | Contains the ONNX Runtime and CUDA for GPU |
| mcr.microsoft.com/azureml/onnxruntime:latest-tensorrt | Contains ONNX Runtime and TensorRT for GPU |
| mcr.microsoft.com/azureml/onnxruntime:latest-openvino-vadm | Contains ONNX Runtime and OpenVINO for Intel Vision Accelerator Design based on Movidius™ Myriad X VPUs |
| mcr.microsoft.com/azureml/onnxruntime:latest-openvino-myriad | Contains ONNX Runtime and OpenVINO for Intel Movidius™ USB sticks |

For more information about the ONNX Runtime base images see the ONNX Runtime dockerfile section in the GitHub repo.

Tip

Since these images are publicly available, you do not need to provide an address, username or password when using them.

For more information, see Azure Machine Learning containers repository on GitHub.

Use an image with the Azure Machine Learning SDK

To use an image stored in the Azure Container Registry for your workspace, or a container registry that is publicly accessible, set the following Environment attributes:

- docker.enabled = True

- docker.base_image: Set to the registry and path to the image.

To use an image from a private container registry that is not in your workspace, you must use docker.base_image_registry to specify the address of the repository and a user name and password:

You must add azureml-defaults with version >= 1.0.45 as a pip dependency. This package contains the functionality needed to host the model as a web service. You must also set the inferencing_stack_version property on the environment to 'latest'; this installs specific apt packages needed by the web service.

After defining the environment, use it with an InferenceConfig object to define the inference environment in which the model and web service will run.

At this point, you can continue with deployment. For example, the following code snippet would deploy a web service locally using the inference configuration and custom image:

For more information on deployment, see Deploy models with Azure Machine Learning.

For more information on customizing your Python environment, see Create and manage environments for training and deployment.

Use an image with the Machine Learning CLI

Important


Currently the Machine Learning CLI can use images from the Azure Container Registry for your workspace or publicly accessible repositories. It cannot use images from standalone private registries.

Before deploying a model using the Machine Learning CLI, create an environment that uses the custom image. Then create an inference configuration file that references the environment. You can also define the environment directly in the inference configuration file. The following JSON document demonstrates how to reference an image in a public container registry. In this example, the environment is defined inline:

This file is used with the az ml model deploy command. The --ic parameter is used to specify the inference configuration file.
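Below is a sketch of an inference configuration with an inline environment, generated from Python. The field names (entryScript, environment, docker.baseImage, docker.enabled, python.condaDependencies) are assumptions based on the Azure Machine Learning environment JSON format, so check them against the CLI version you use. The values score.py, my-deploy-env, myservice and mymodel:1 are hypothetical placeholders. The pip list includes azureml-defaults >= 1.0.45, as required above.

```python
import json
import shlex

# Hypothetical placeholder values; field names are assumed from the
# Azure ML environment JSON format and should be verified for your CLI.
inference_config = {
    "entryScript": "score.py",
    "environment": {
        "name": "my-deploy-env",
        "docker": {
            "enabled": True,
            "baseImage": "mcr.microsoft.com/azureml/o16n-sample-user-base/ubuntu-miniconda",
        },
        "python": {
            "userManagedDependencies": False,
            "condaDependencies": {
                "dependencies": [
                    "python=3.6.2",
                    {"pip": ["azureml-defaults>=1.0.45"]},
                ],
            },
        },
    },
}

# Save this text as inferenceconfig.json next to score.py.
config_text = json.dumps(inference_config, indent=4)

# --ic points az ml model deploy at the inference configuration file.
deploy_cmd = ["az", "ml", "model", "deploy", "-n", "myservice",
              "-m", "mymodel:1", "--ic", "inferenceconfig.json"]

if __name__ == "__main__":
    print(config_text)
    print(shlex.join(deploy_cmd))
```

Depending on the compute target, az ml model deploy may need further options, such as a deployment configuration file.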

For more information on deploying a model using the ML CLI, see the 'model registration, profiling, and deployment' section of the CLI extension for Azure Machine Learning article.

Next steps


- Learn more about Where to deploy and how.

- Learn how to Train and deploy machine learning models using Azure Pipelines.